Viewing the disk space usage (size) of all the tables in a SQL Server 2005/2008 database

BEGIN TRY

SELECT

(row_number() over(order by a3.name, a2.name))%2 as l1,

a3.name AS [schemaname],

a2.name AS [tablename],

a1.rows as row_count,

(a1.reserved + ISNULL(a4.reserved,0))* 8 AS reserved,

a1.data * 8 AS data,

(CASE WHEN (a1.used + ISNULL(a4.used,0)) > a1.data THEN

(a1.used + ISNULL(a4.used,0)) - a1.data ELSE 0 END) * 8 AS index_size,

(CASE WHEN (a1.reserved + ISNULL(a4.reserved,0)) > a1.used THEN

(a1.reserved + ISNULL(a4.reserved,0)) - a1.used ELSE 0 END) * 8 AS unused

FROM

(SELECT

ps.object_id,

SUM (

CASE

WHEN (ps.index_id < 2) THEN row_count

ELSE 0

END

) AS [rows],

SUM (ps.reserved_page_count) AS reserved,

SUM (

CASE

WHEN (ps.index_id < 2) THEN

(ps.in_row_data_page_count + ps.lob_used_page_count + ps.row_overflow_used_page_count)

ELSE (ps.lob_used_page_count + ps.row_overflow_used_page_count)

END

) AS data,

SUM (ps.used_page_count) AS used

FROM sys.dm_db_partition_stats ps

GROUP BY ps.object_id) AS a1

LEFT OUTER JOIN

(SELECT

it.parent_id,

SUM(ps.reserved_page_count) AS reserved,

SUM(ps.used_page_count) AS used

FROM sys.dm_db_partition_stats ps

INNER JOIN sys.internal_tables it ON (it.object_id = ps.object_id)

WHERE it.internal_type IN (202,204)

GROUP BY it.parent_id) AS a4 ON (a4.parent_id = a1.object_id)

INNER JOIN sys.all_objects a2 ON ( a1.object_id = a2.object_id )

INNER JOIN sys.schemas a3 ON (a2.schema_id = a3.schema_id)

WHERE a2.type <> 'S' and a2.type <> 'IT'

ORDER BY a3.name, a2.name

END TRY

BEGIN CATCH

SELECT

-100 as l1

, 1 as schemaname

, ERROR_NUMBER() as tablename

, ERROR_SEVERITY() as row_count

, ERROR_STATE() as reserved

, ERROR_MESSAGE() as data

, 1 as index_size

, 1 as unused

END CATCH

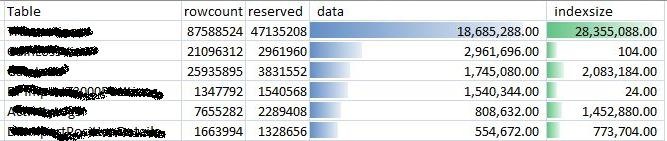

Quick extra tip, if you export the result-set to Excel you can do additional sorts to figure out more stuff. For example the first table on this list was taking up 47GB of disk space in a 63 GB database. With the index taking more space than the actual data! But this is more of an OLAP db with just one batch update daily and optimized for retrieving data faster so I guess that's okay.